High Availability: Ensure Continuous Data Availability

In a global digital economy, companies are increasingly reliant on an IT infrastructure that is fully operational around the clock. Downtime can result in unhappy customers, lost profits, and interruptions to business operations. Plus, with more and more organizations embracing a hybrid work model, you need to ensure that employees can access the resources they need from anywhere, at any time.

While you can’t eliminate the risk of downtime, you can take steps to strengthen your network systems and infrastructure. In this guide, we discuss how to implement high availability architecture so that you can maintain uninterrupted access to critical business data.

What Is High Availability?

In the field of information technology, high availability is a system’s ability to operate without service interruptions for a specific period of time. A high availability system isn’t immune to failure. Rather, it has enough safeguards in place to address problems and ensure system uptime in the event of a failure.

High availability is measured as a percentage. A 100% rating would indicate that a system never fails or experiences downtime. But because that’s nearly impossible, most vendors will guarantee a certain level of availability or uptime in their service level agreements (SLAs):

- Two nines: 99% uptime

- Three nines: 99.9% uptime

- Four nines: 99.99% uptime

- Five nines: 99.999% uptime

The difference between two nines and five nines might seem minimal until you translate it into downtime. At 99% availability, a system can be down for as much as 87.6 hours (more than three and a half days) in a year. In contrast, 99.999% availability allows less than six minutes of downtime annually.
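To make the arithmetic behind these figures concrete, here is a minimal sketch that converts an availability percentage into the maximum downtime it allows per year. The function name `annual_downtime_hours` is our own, and the calculation assumes a 365-day year.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def annual_downtime_hours(availability_percent: float) -> float:
    """Return the maximum yearly downtime (in hours) an availability target allows."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100")
    return HOURS_PER_YEAR * (100 - availability_percent) / 100


if __name__ == "__main__":
    targets = [("Two nines", 99.0), ("Three nines", 99.9),
               ("Four nines", 99.99), ("Five nines", 99.999)]
    for label, target in targets:
        hours = annual_downtime_hours(target)
        print(f"{label} ({target}%): {hours:.2f} hours, or {hours * 60:.1f} minutes, per year")
```

Running the script prints one line per tier, which makes it easy to compare an SLA against the downtime your business can actually absorb.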

According to Atlassian, cloud vendors like Amazon Web Services (AWS), Google, and Microsoft set their cloud SLAs at 99.9%. Five nines availability is the goal for many IT teams, but it can be difficult to achieve this state of near-zero downtime.

What Are the Key Components of a High Availability Structure?

A high availability system needs a few key components in place to work properly. These include:

- Load balancing: This is a computing process that distributes network traffic to optimize performance. A specialized server known as a load balancer intercepts requests and assigns them to different backend servers.

- Failover: To ensure high availability, a system must be able to monitor itself and know when a failure occurs. When regular monitoring shows that a primary component isn’t working properly, the system recognizes the failure and a secondary component takes over. This transfer of workload after failure detection is known as failover.

- Redundancy: In simple terms, this means duplicating critical components to improve a system’s reliability. For example, you might have two servers with duplicate data so that if one fails, the other can take over. Redundancy can both improve system performance and put safeguards in place in case components malfunction.
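The three components above can be sketched together in a few lines. This is an illustrative toy, not a production load balancer: the class `RoundRobinBalancer` and its methods are hypothetical names, round-robin is only one of many possible assignment algorithms, and in practice the `mark_down` and `mark_up` calls would be driven by real health checks.

```python
class RoundRobinBalancer:
    """Hands out backends in turn, skipping any that are marked unhealthy."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self.backends = list(backends)     # redundancy: several interchangeable servers
        self.healthy = set(self.backends)  # monitoring state, updated by health checks
        self._next = 0

    def mark_down(self, backend):
        """Record that a health check failed for this backend."""
        self.healthy.discard(backend)

    def mark_up(self, backend):
        """Record that a known backend has recovered."""
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self):
        """Return the next healthy backend (load balancing plus failover)."""
        for _ in range(len(self.backends)):
            candidate = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")
```

With three backends, requests rotate across all of them. Once one is marked down, traffic flows only to the remaining two until it is marked up again.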

Types of High Availability Concepts

There are different approaches to minimizing downtime. Two common models are:

- Active-active: In an active-active high availability cluster, network traffic is shared across two or more servers. The goal is to achieve load balancing. Instead of connecting directly to a specific server, web clients are routed through a load balancer that uses an algorithm to assign the client to one of the servers. This helps keep any one network node from getting overloaded.

- Active-passive: Also known as active-standby, this type of high availability cluster also has at least two servers. When one is active, the other is on standby. All clients connect to the same server. If the primary system fails, the passive server takes over.

If you maintain the same settings across all servers or nodes, the end user shouldn’t notice a difference between an active-active and an active-passive cluster. The difference between these two architectures is resource usage. Active-active clusters use all servers during normal operation. Active-passive clusters, in contrast, only use certain servers in the event of a failure. In general, you’ll want to use an active-active model in use cases where a cluster is running multiple critical services simultaneously.

Both models rely on redundancies. Active-passive setups use N+1 redundancy, in which each type of resource has a dedicated backup component so that you always have sufficient resources in place for existing demand. Active-active systems have 1+1 redundancy, in which the redundant components are always in operation. If a single component fails, the parallel active resources take over its load.
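As a rough illustration of the active-passive model, the sketch below promotes the standby node once the active node fails several health checks in a row. The class name, the `failure_threshold` parameter, and the node labels are all hypothetical. Requiring consecutive failures is a common way to avoid failing over on a single dropped check, but the right threshold depends on your environment.

```python
class ActivePassiveCluster:
    """Tracks which node serves traffic in a two-node active-passive pair."""

    def __init__(self, primary, standby, failure_threshold=3):
        self.active = primary
        self.standby = standby
        self.failure_threshold = failure_threshold
        self._consecutive_failures = 0

    def record_health_check(self, ok):
        """Record a health-check result for the active node.

        Returns the node that should serve traffic after this check.
        """
        if ok:
            self._consecutive_failures = 0
            return self.active
        self._consecutive_failures += 1
        can_fail_over = self.standby is not None
        if self._consecutive_failures >= self.failure_threshold and can_fail_over:
            # Failover: promote the standby. No standby remains until one is added.
            self.active, self.standby = self.standby, None
            self._consecutive_failures = 0
        return self.active
```

Note that after a failover the cluster has no standby left, which is why a failed primary should be repaired or replaced promptly to restore redundancy.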

Disaster Recovery

Disaster recovery refers to an enterprise’s ability to respond and recover when a disruptive event occurs. Disasters can be manmade, such as a cybersecurity breach, or natural, such as a flood that damages equipment. All organizations should have an established disaster recovery plan that outlines the steps they will take to prepare for and respond to such events. In the context of network systems, the goal of a disaster recovery protocol is to bring critical services back online as soon as possible.

A disaster recovery plan should include:

- An up-to-date inventory of all existing resources

- Locations of data backups, especially for sensitive data like intellectual property (IP) or client personally identifiable information (PII)

- Documentation of internal and vendor-provided recovery strategies

- Prioritization for bringing key services or functions back online

High availability and disaster recovery are similar in that they both aim to keep your systems working effectively and minimize downtime. The key difference is that high availability is focused on addressing single points of failure that arise while a system is running. Disaster recovery, in contrast, addresses problems after the entire system fails.

Fault Tolerance

Another key aspect of a high availability system, fault tolerance is a system’s ability to continue operating correctly when one or more components fail. Fault-tolerant networks have built-in redundancies so that any single point of failure doesn’t take down an entire system. This helps ensure high system availability through measures such as:

- Alternative power sources, such as a generator

- Equivalent hardware systems

- Backup software instances

A fully fault-tolerant system has no service interruption. For many organizations, however, the cost of maintaining complete fault tolerance may be prohibitive, since you will need to constantly maintain instances of the same workload on two or more independent server systems.
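To see why redundancy raises availability so sharply, and why each extra replica adds cost for a shrinking gain, consider this minimal sketch. It rests on two simplifying assumptions that rarely hold perfectly in practice: replicas fail independently of one another, and the switch to a surviving replica is instantaneous. The function name is our own.

```python
def combined_availability(component_availability: float, replicas: int) -> float:
    """Availability of a group of replicas where any one surviving replica is enough.

    Assumes independent failures: the group is down only when every replica is down.
    """
    if not 0.0 <= component_availability <= 1.0:
        raise ValueError("availability must be a fraction between 0 and 1")
    if replicas < 1:
        raise ValueError("at least one replica is required")
    return 1.0 - (1.0 - component_availability) ** replicas


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(f"{n} replica(s) at 99% each: {combined_availability(0.99, n):.6%}")
```

Under these assumptions, two servers that are each available 99% of the time reach 99.99% together. Correlated failures, such as a shared power feed, will make the real figure lower.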

Best Practices for High Availability Systems

To optimize operational performance and reliability, use these best practices for testing and maintaining high availability solutions at your organization:

- Set your goals: Every enterprise and instance is different. Three nines might be sufficient in one setting and unacceptable in another. Start by defining your maximum allowable system downtime, which will help guide your system architecture. Be ready to communicate the true cost of downtime, from lost revenue to a shrinking customer base, should you need to justify the cost of additional infrastructure.

- Use multiple application servers: Deploying your mission-critical applications over multiple servers establishes needed redundancies. If one server is overburdened, it can slow down significantly or even crash. When key apps are available over multiple servers, you can redirect traffic to an operational server so that the end user doesn’t experience an interruption in real time.

- Establish geographic redundancy: Avoid housing all of your physical servers in a single location. Instead, spread network servers over multiple physical data centers to provide added protection from power outages caused by natural disasters. Whether your backup servers are located in a different town, state, or even another country, you’ll have a line of defense against disasters like earthquakes, fires, and floods, as well as brute-force attacks.

- Implement load balancers: Using load balancing allows you to automatically redirect traffic if a server failure is detected. Think of a load balancer like a traffic cop. It sits between end users and your company’s servers. When a client request comes in, the load balancer chooses which server to direct it to. This ensures uninterrupted access to critical applications and services while being careful not to overload any one server.

- Set a Recovery Point Objective (RPO): A major outage can mean losing any data written since your last backup or replication. Your RPO is the maximum amount of data that your enterprise will accept losing, expressed in time. Some organizations set their RPO to 30 minutes; others aim for 60 seconds or less. This is highly dependent on the type of data you manage and your industry. For example, a hospital can afford to lose almost no updates to electronic health records, while a small startup might be able to tolerate losing an hour of data.

- Know your time objective: Similar to an RPO, a Recovery Time Objective (RTO) measures the maximum amount of time allotted to restore normal operations after a service disruption or incident. RTOs are measured in seconds, minutes, or hours, and may differ across applications or services depending on their importance.

- Run test scenarios: Once your IT team has established your RPO and RTO metrics, you should test your system’s ability to recover. Practice transferring network services, switches, and other key infrastructure to their backup components.
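When you run test scenarios, it helps to check the results against your objectives in a repeatable way. The sketch below is one simple way to do that, assuming you record three timestamps during a drill: the last successful backup, the moment of failure, and the moment service was restored. The function names and timestamps are illustrative only.

```python
from datetime import datetime, timedelta


def meets_rpo(last_backup: datetime, failure_time: datetime, rpo: timedelta) -> bool:
    """True if the window of data lost since the last backup is within the RPO."""
    return failure_time - last_backup <= rpo


def meets_rto(failure_time: datetime, restored_time: datetime, rto: timedelta) -> bool:
    """True if service was restored within the RTO."""
    return restored_time - failure_time <= rto


if __name__ == "__main__":
    last_backup = datetime(2024, 1, 1, 12, 0)
    failure = datetime(2024, 1, 1, 12, 20)
    restored = datetime(2024, 1, 1, 13, 5)

    print("RPO of 30 minutes met:", meets_rpo(last_backup, failure, timedelta(minutes=30)))
    print("RTO of 1 hour met:", meets_rto(failure, restored, timedelta(hours=1)))
```

In this made-up drill, 20 minutes of data would be lost and recovery takes 45 minutes, so both a 30-minute RPO and a one-hour RTO are satisfied.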

High Availability in the Cloud and/or Hybrid Cloud Scenarios

Today’s distributed workforces are heavily reliant on cloud computing to achieve their business objectives. In an ideal world, moving to the cloud ensures that your applications and tools are available to any end user with an internet connection. Implementing high availability solutions in the cloud, however, presents a unique set of challenges.

Cloud computing relies on software as a service (SaaS) and infrastructure as a service (IaaS), which can limit your IT team’s ability to configure certain applications or instances. And unlike on-premises data centers, cloud data storage can expose enterprises to additional risk. A data breach can negatively affect your company’s reputation, cause you to lose customers, or expose you to litigation or regulatory issues.

Despite the inherent risks, more and more industries are turning to cloud-based solutions to address the challenges of flexibility and scalability. Common cloud-based high availability solutions include:

Multi-Region Replication

Multi-region replication is a strategy for ensuring availability by making exact copies of data or instances across several distinct locations. This approach addresses disaster recovery planning, as well as failover, because normal operations can continue even if one site experiences an issue.

While multi-region replication increases storage requirements, it also offers the benefit of lower latency and better performance, since users in each region can access data locally. Plus, it’s a good solution for growing businesses, as you can add or remove storage sites without interrupting operations.
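The latency and failover benefits described above come from routing each user to the closest region that is still healthy. Here is a minimal sketch of that decision, with hypothetical region names and latency figures. Real deployments typically delegate this to DNS-based or provider-managed routing rather than application code.

```python
def choose_region(latencies_ms, healthy_regions):
    """Return the healthy region with the lowest measured latency.

    latencies_ms: mapping of region name to round-trip latency in milliseconds.
    healthy_regions: set of region names currently passing health checks.
    """
    candidates = {region: ms for region, ms in latencies_ms.items()
                  if region in healthy_regions}
    if not candidates:
        raise RuntimeError("no healthy region holds a replica")
    return min(candidates, key=candidates.get)


if __name__ == "__main__":
    latencies = {"us-east": 18.0, "eu-west": 95.0, "ap-south": 210.0}
    print(choose_region(latencies, {"us-east", "eu-west", "ap-south"}))
    # If the nearest region goes offline, the next closest replica takes over.
    print(choose_region(latencies, {"eu-west", "ap-south"}))
```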

Auto-Scaling

Demand for cloud resources may fluctuate unexpectedly. To handle these demand spikes, you can rely on cloud scaling:

- Horizontal scaling: Also known as scaling out, this approach adds nodes or machines to handle increased demand.

- Vertical scaling: Also called scaling up, vertical scaling avoids slowdowns by adding more processing power. Increasing capacity could require upgrading storage, memory, or network speed.

Because cloud service providers offer IaaS, horizontal and vertical scaling can be built in as automated processes. Both options offer advantages when it comes to costs: horizontal scaling requires more investment upfront, whereas the initial investment for scaling up is minimal. However, as the need for more hardware increases, vertical scaling can cause costs to increase exponentially.
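To show what an automated horizontal scaling decision might look like, the sketch below uses a simple proportional rule: scale the node count by the ratio of observed utilization to target utilization, then clamp the result to configured limits. The function name, parameters, and default limits are assumptions for illustration. Managed auto-scaling services apply their own policies, cooldowns, and metrics.

```python
import math


def desired_node_count(current_nodes, current_utilization, target_utilization,
                       min_nodes=1, max_nodes=10):
    """Return how many nodes are needed to bring utilization back to the target.

    Utilization values share one unit (for example, average CPU percent).
    """
    if current_nodes < 1:
        raise ValueError("current_nodes must be at least 1")
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    raw = math.ceil(current_nodes * current_utilization / target_utilization)
    # Clamp so the cluster never shrinks below min_nodes or grows past max_nodes.
    return max(min_nodes, min(max_nodes, raw))


if __name__ == "__main__":
    # Four nodes running at 90% CPU with a 60% target calls for scaling out.
    print(desired_node_count(4, 90, 60))
    # The same four nodes at 30% CPU can be scaled in.
    print(desired_node_count(4, 30, 60))
```

The upper limit doubles as a cost guardrail, since it caps how far a sudden demand spike can grow the cluster.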

Frequently Asked Questions

What is high availability in IT?

High availability refers to a system’s ability to remain operational without interruption for a specified period, even during component failures. It involves load balancing, failover mechanisms, and redundancy to minimize downtime.

What are the key components of a high availability system?

The main components include load balancing to distribute traffic, failover systems that switch to backups during failures, and redundancy to ensure critical services remain available.

What is the difference between active-active and active-passive architecture?

Active-active clusters run multiple servers simultaneously for load balancing, while active-passive clusters have one active server and one on standby, only switching when the primary fails.

How is disaster recovery different from high availability?

High availability aims to prevent service interruptions by avoiding single points of failure, while disaster recovery focuses on restoring systems after a total failure or catastrophic event.

What is fault tolerance in high availability systems?

Fault tolerance refers to a system’s ability to continue functioning properly even if one or more components fail, through built-in redundancies like backup servers or power sources.

What are best practices for high availability?

Key practices include setting availability goals, defining RPO and RTO, using multiple application servers, establishing geographic redundancy, implementing load balancers, and running regular failover tests.

How does cloud-based high availability work?

Cloud-based high availability uses strategies like multi-region replication and auto-scaling to ensure minimal downtime and optimized performance across distributed environments.

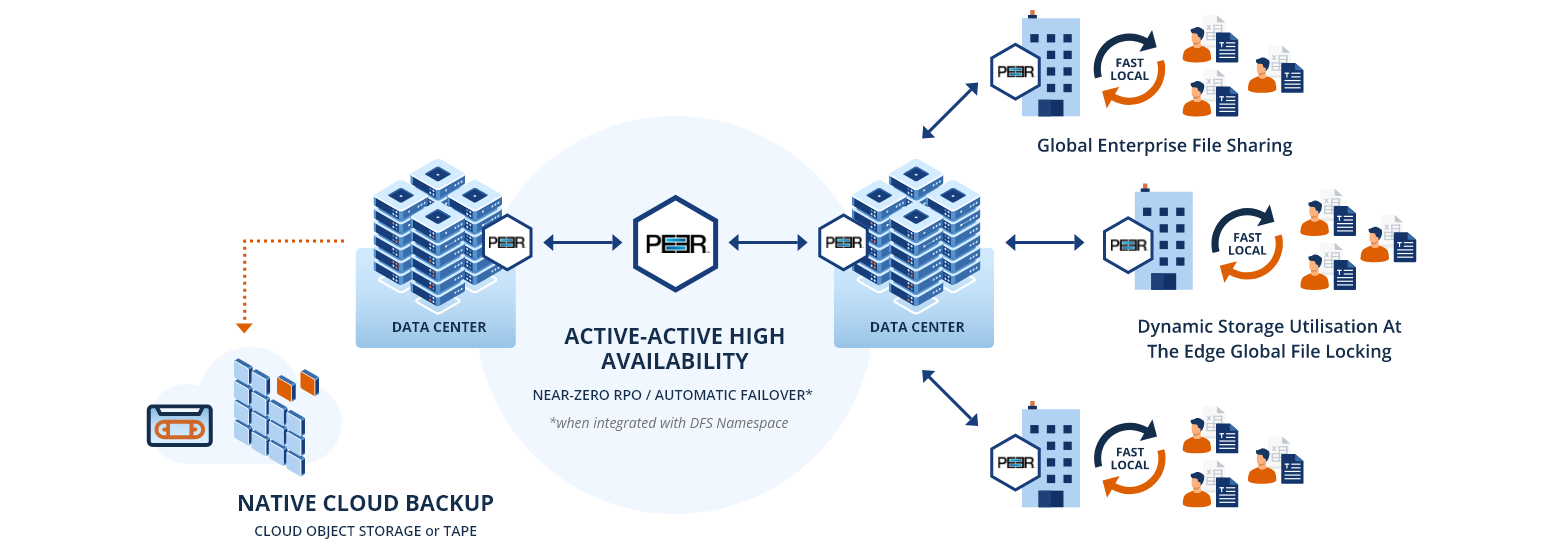

Choose Peer Global File Service (PeerGFS) for High Availability, File Sharing, and Fault Tolerance

Maintaining uninterrupted access to data and business continuity requires a multifaceted approach. With a set of safeguards in place, you can keep your business up and running while ensuring a seamless experience for your employees and customers. To achieve the level of availability and fault tolerance your business needs to succeed, partner with Peer Software. Our distributed file system, PeerGFS, helps your team simplify the data backup process across cloud and on-premises storage. PeerGFS offers:

- Distributed file locking to prevent version conflicts

- Active-active synchronization to replicate data in real time across regions

- Dynamic storage utilization to control unstructured data growth

- Support for native file and object formats

- Integration with leading storage vendor platforms

Peer Software boasts over 25 years of experience in geographically dispersed file replication and management, helping customers ensure data protection, availability, and productivity across the enterprise. For more information, request a trial of PeerGFS or contact our team today.

Cesar Nunez

A twenty-year veteran of the IT industry, Cesar followed his love of technology from his start as a desktop support technician at a small startup to a spell as a support engineer at Microsoft. For the past 15 years, Cesar has held roles supporting enterprise customers, focused primarily on the storage space, aiding in configuration, knowledge transfer, and technical assistance as the subject matter expert for various technologies.

At Peer Software, Cesar provides technical leadership to the global engineering team and assists customers with deployment and configuration of PeerGFS, Peer's Global File Service for multi-site, multi-platform file synchronization.